import sklearn.datasets as sk_data

X, y, gt_centers = sk_data.make_blobs(n_samples=100, centers=3, n_features=30,
                                      center_box=(-10.0, 10.0), random_state=0, return_centers=True)

Clustering in Practice

…featuring \(k\)-means

Today we’ll work through an extended example of \(k\)-means clustering in practice, using the Python library scikit-learn.

scikit-learn is the main Python library for machine learning.

Our goals are to learn:

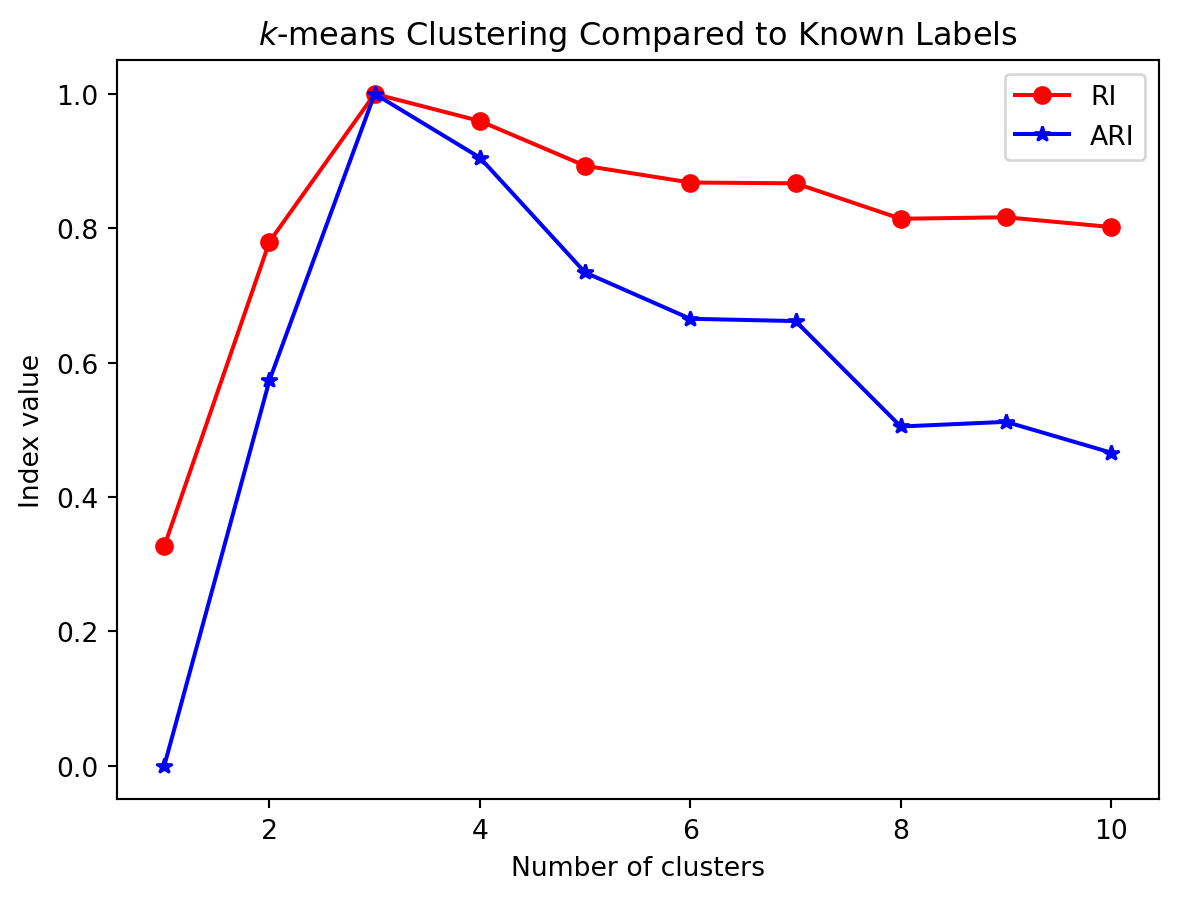

- How clustering is used in practice

- Tools for evaluating the quality of a clustering

- Tools for assigning meaning or labels to a cluster

- Important visualizations

- A little bit about feature extraction for text

Visualization

Training wheels: Synthetic data

Generally, when learning about or developing a new unsupervised method, it’s a good idea to try it out on a dataset in which you already know the “right” answer.

One way to do that is to generate synthetic data that has some known properties.

Among other things, scikit-learn contains tools for generating synthetic data for testing.

We’ll use datasets.make_blobs.

Let’s check the shapes of the returned values:

print("X.shape: ", X.shape)

print("y.shape: ", y.shape)

print("gt_centers: ", gt_centers.shape)

X.shape: (100, 30)

y.shape: (100,)

gt_centers: (3, 30)

datasets.make_blobs takes as arguments:

- n_samples: The number of samples to generate

- centers: The number of cluster centers to generate

- n_features: The number of features, or in other words the dimensionality of each sample

- center_box: The bounds of the cluster centers

- random_state: The random seed for reproducibility

- return_centers: A boolean flag, True to return the centers so that we have ground truth
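Because the generator hands back both labels and centers, we can sanity-check the ground truth before clustering anything. The sketch below repeats the make_blobs call from these notes and checks that each point's label matches its nearest ground-truth center. The names cluster_sizes, dists_to_centers and nearest_center are illustrative only and do not appear in the original notes.

```python
import numpy as np
import sklearn.datasets as sk_data

# Same call as in the notes: 100 points, 3 clusters, 30 features.
X, y, gt_centers = sk_data.make_blobs(
    n_samples=100, centers=3, n_features=30,
    center_box=(-10.0, 10.0), random_state=0, return_centers=True)

# Number of points generated for each cluster label.
cluster_sizes = np.bincount(y)

# Distance from every point to every ground-truth center: shape (100, 3).
dists_to_centers = np.linalg.norm(X[:, None, :] - gt_centers[None, :, :], axis=2)

# Index of the nearest ground-truth center for each point.
nearest_center = dists_to_centers.argmin(axis=1)

print("cluster sizes:", cluster_sizes)
print("labels agree with nearest center:", np.array_equal(nearest_center, y))
```

If the labels and nearest centers agree, the clusters are well separated, which is what we want from a "training wheels" dataset.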

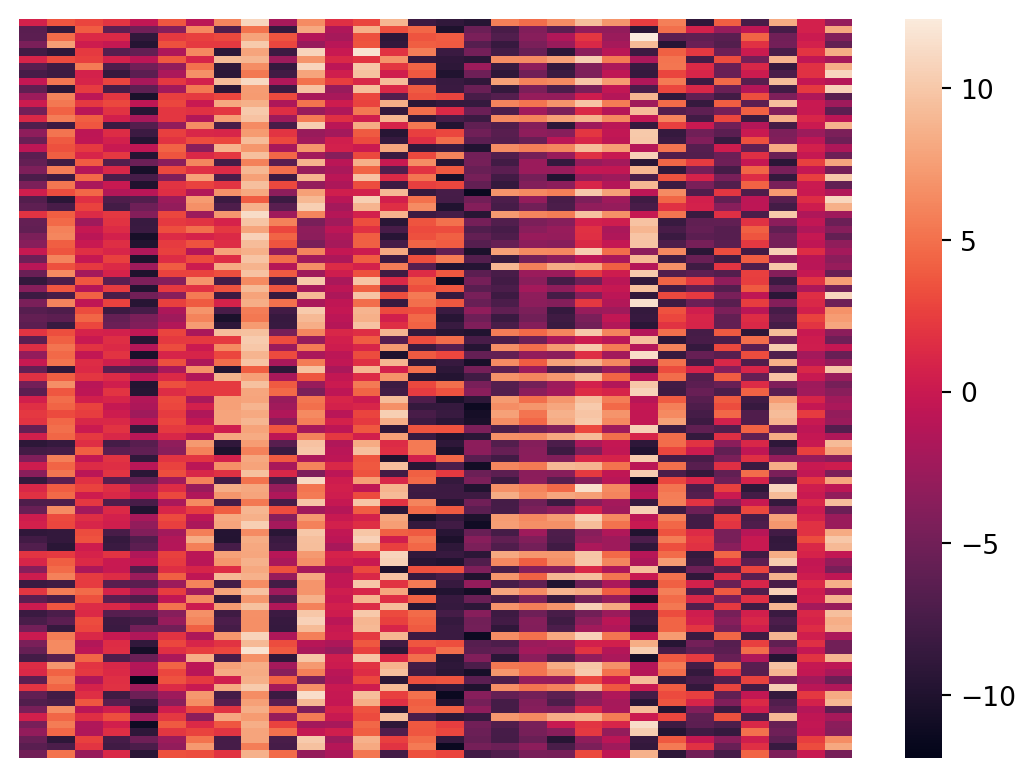

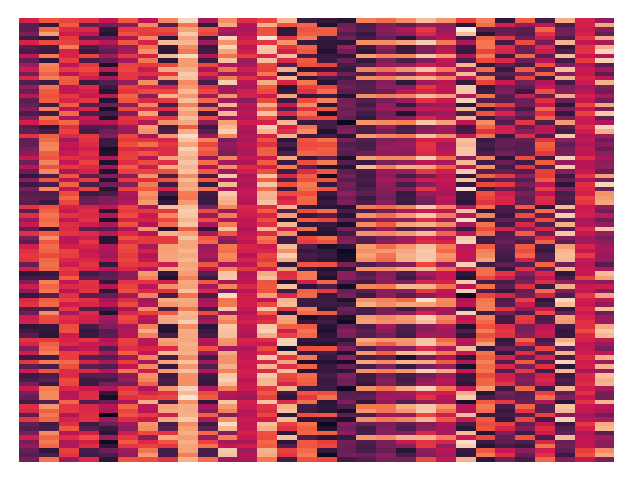

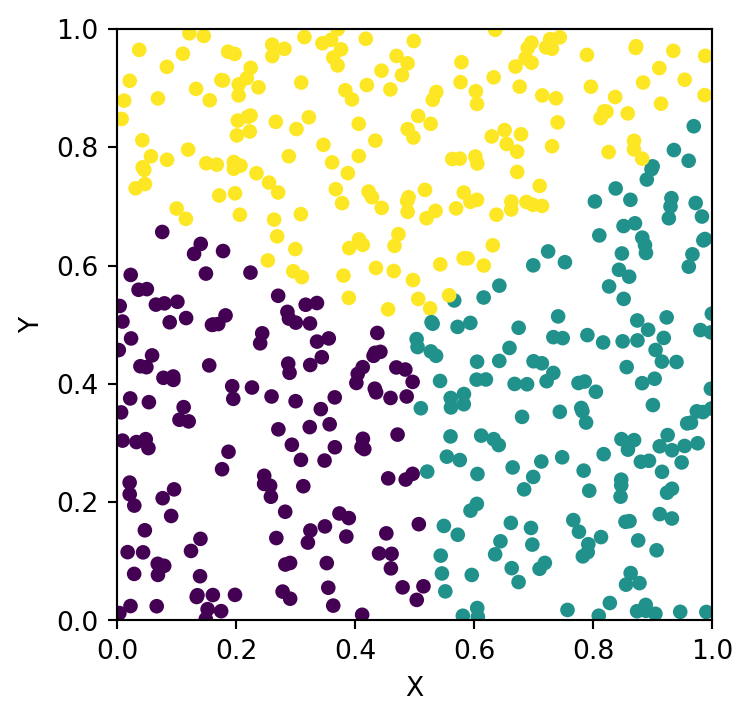

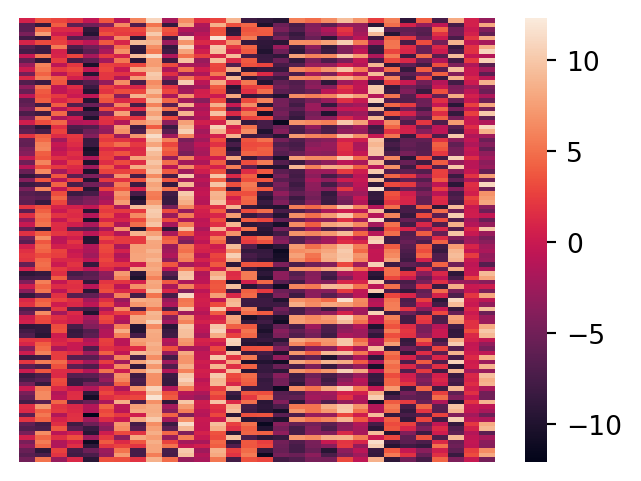

Visualize the Data

To get a sense of the raw data we can inspect it.

For statistical visualization, a good library is Seaborn.

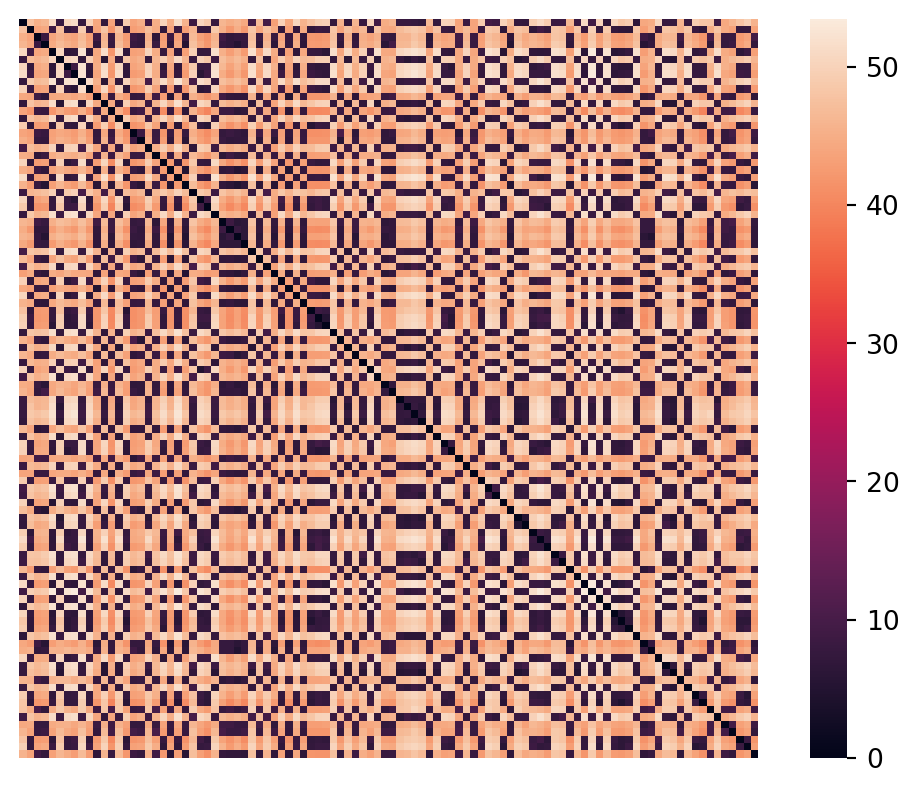

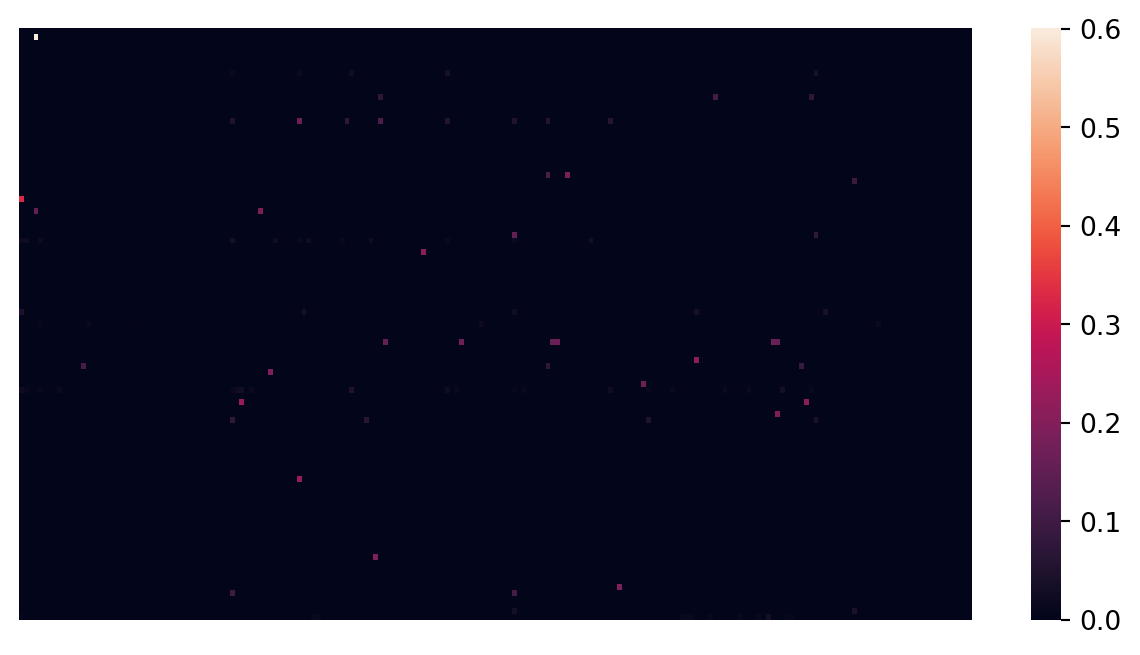

Let’s plot the X data as a matrix heatmap, where every row is a data point and the columns are the features.


import seaborn as sns

import matplotlib.pyplot as plt

# Set the figure size to make the plot smaller

plt.figure(figsize=(7, 5)) # Adjust the width and height as needed

sns.heatmap(X, xticklabels = False, yticklabels = False, linewidths = 0, cbar = True)

plt.show()
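The rows of X come back in shuffled order, so the heatmap does not show the cluster structure directly. One optional variation, sketched here as an assumption rather than as part of the original notes, is to sort the rows by ground-truth label first. The names order, X_sorted and y_sorted are hypothetical.

```python
import numpy as np
import sklearn.datasets as sk_data

# Regenerate the same synthetic data (same random_state as in the notes).
X, y = sk_data.make_blobs(n_samples=100, centers=3, n_features=30,
                          center_box=(-10.0, 10.0), random_state=0)

# Stable sort of the row indices by ground-truth label.
order = np.argsort(y, kind="stable")

# Rows grouped by cluster: all label-0 rows, then label-1, then label-2.
X_sorted = X[order]
y_sorted = y[order]

print(X_sorted.shape)
print(y_sorted[:5], y_sorted[-5:])
```

Passing X_sorted to sns.heatmap in place of X should show three horizontal bands, one per cluster. This only works when ground-truth labels are available, which is not the case for real unsupervised problems.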

Geometrically, these points live in a 30-dimensional space, so we cannot directly visualize their geometry.

This is a big problem that you will run into time and again!

We will discuss methods for visualizing high-dimensional data later on.

For now, we will use a method that, in some cases, can turn a set of pairwise distances into an approximate 2-D representation.

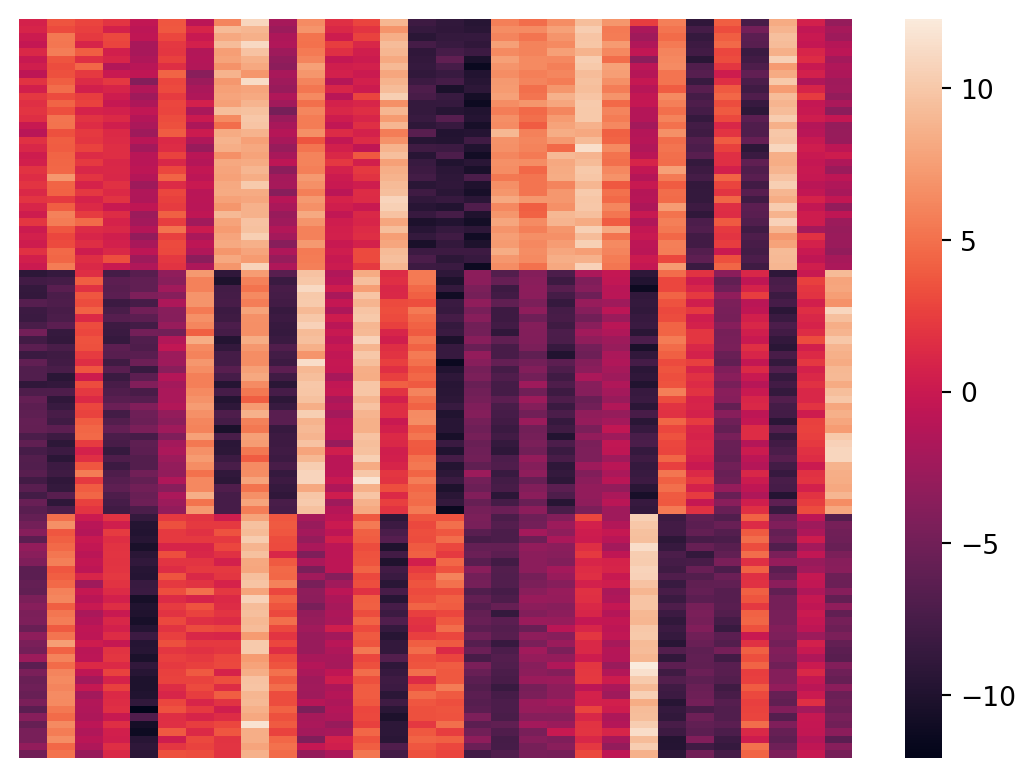

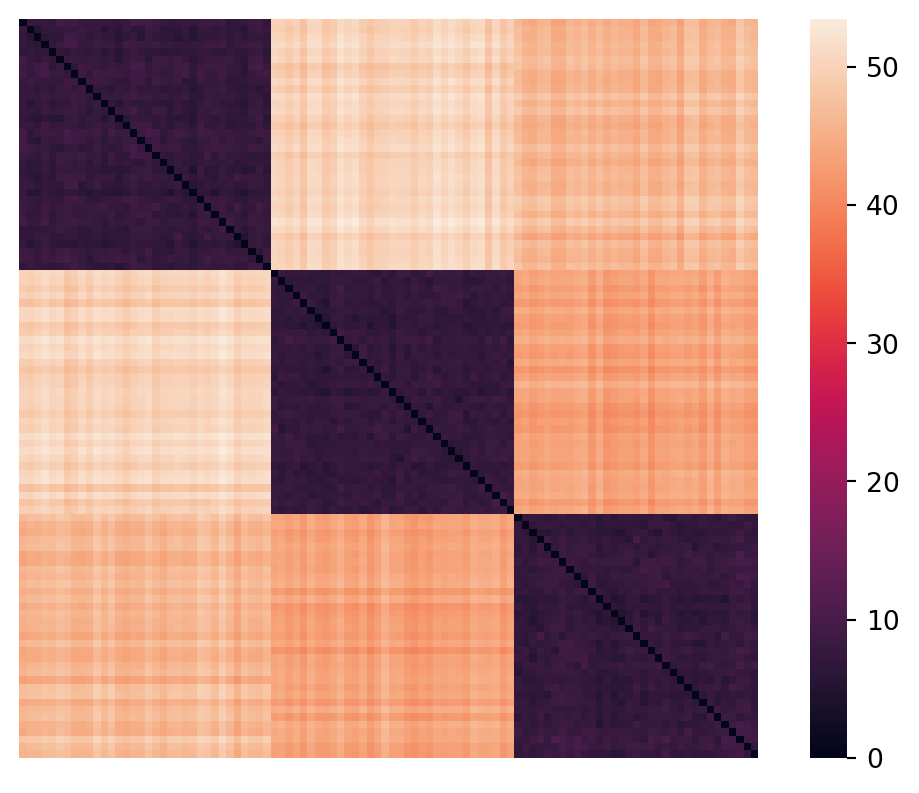

So let’s compute the pairwise distances, in 30 dimensions, for visualization purposes.

We can compute all pairwise distances in a single step using the scikit-learn metrics.euclidean_distances function:

import sklearn.metrics as metrics

euclidean_dists = metrics.euclidean_distances(X)

# euclidean_dists

Matrix shape is: (100, 100)

Let’s look at the upper left and lower right corners of the distances matrix.

Upper left 3x3:

[[ 0. 47.74 45.19]

[47.74 0. 43.67]

[45.19 43.67 0. ]]

...

Lower right 3x3:

[[ 0. 8.19 41.82]

[ 8.19 0. 43.41]

[41.82 43.41 0. ]]

Note that this produces a \(100\times100\) symmetric matrix where the diagonal is all zeros (the distance from each point to itself).
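To make these properties concrete, here is a minimal sketch that recomputes the distances directly from the definition \(\lVert x_i - x_j \rVert_2\) using NumPy broadcasting and compares the result with scikit-learn. The names D_sklearn, diff and D_manual are illustrative and not part of the original notes.

```python
import numpy as np
import sklearn.datasets as sk_data
import sklearn.metrics as metrics

# Regenerate the same synthetic data as in the notes.
X, _ = sk_data.make_blobs(n_samples=100, centers=3, n_features=30,
                          center_box=(-10.0, 10.0), random_state=0)

# Pairwise distances computed by scikit-learn.
D_sklearn = metrics.euclidean_distances(X)

# Direct definition via broadcasting: D[i, j] = ||x_i - x_j||_2.
diff = X[:, None, :] - X[None, :, :]          # shape (100, 100, 30)
D_manual = np.sqrt((diff ** 2).sum(axis=2))   # shape (100, 100)

print("shape:", D_sklearn.shape)
print("matches direct computation:", np.allclose(D_sklearn, D_manual))
print("symmetric:", np.allclose(D_sklearn, D_sklearn.T))
print("zero diagonal:", np.allclose(np.diag(D_sklearn), 0.0))
```

The broadcasting version builds an intermediate array with one entry per pair and per feature, so it is fine for 100 points but becomes memory-hungry for large datasets.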

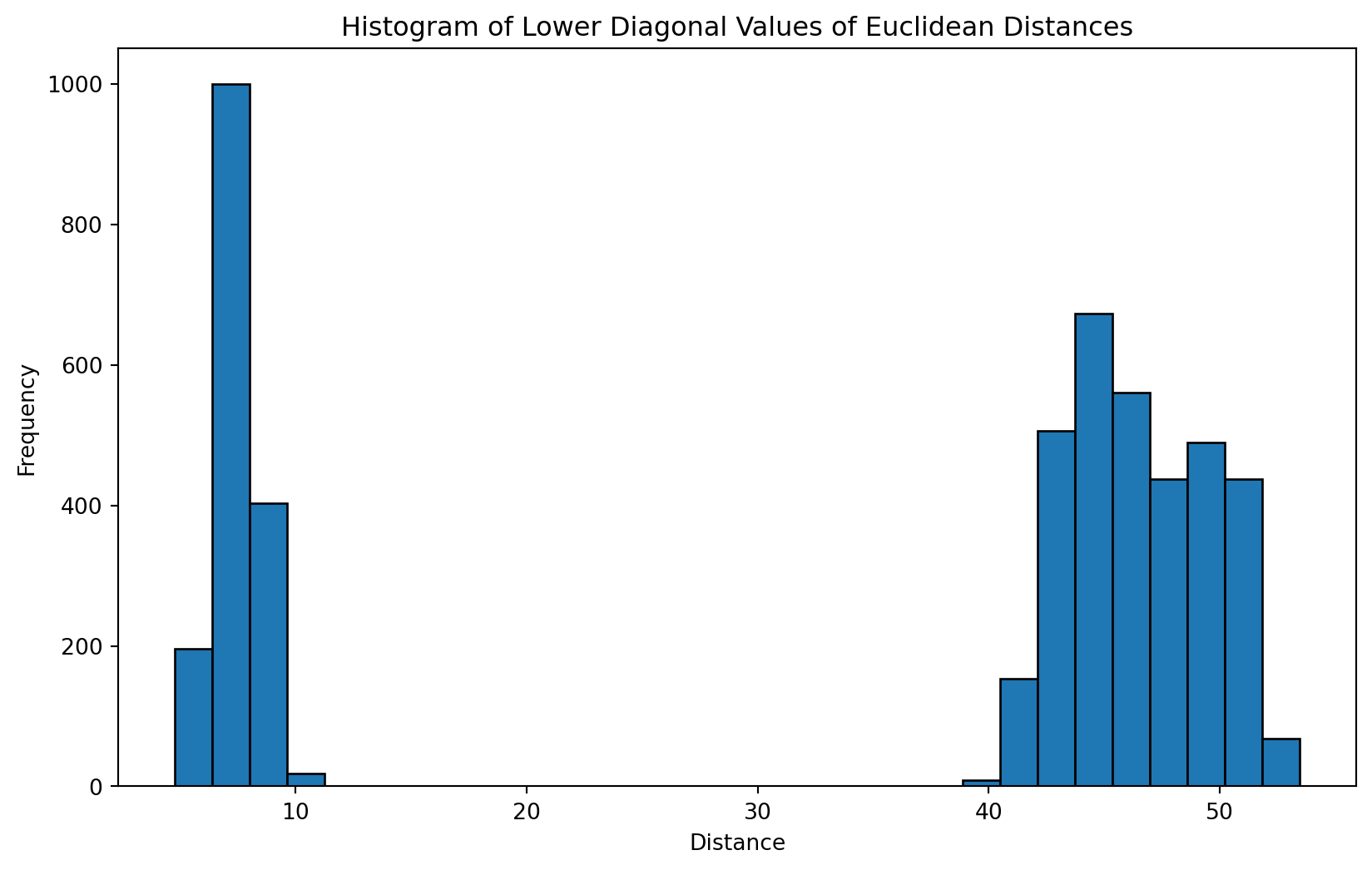

Let’s look at a histogram of the distances.


import numpy as np

import matplotlib.pyplot as plt

import sklearn.metrics as metrics

# Compute the pairwise distances

euclidean_dists = metrics.euclidean_distances(X)

# Extract the lower triangular part of the matrix, excluding the diagonal

lower_triangular_values = euclidean_dists[np.tril_indices_from(euclidean_dists, k=-1)]

# Plot the histogram

plt.figure(figsize=(10, 6))

plt.hist(lower_triangular_values, bins=30, edgecolor='black')

plt.title('Histogram of Pairwise Euclidean Distances (Lower Triangle)')

plt.xlabel('Distance')

plt.ylabel('Frequency')

plt.show()

Remember that these are the pairwise distances, in 30 dimensions. At least for this dataset, the histogram shows two cleanly separated groups of values, presumably the intra-cluster distances (the smaller values) and the inter-cluster distances (the larger values).
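Since this is synthetic data with known labels, we can check that interpretation directly. The following sketch splits the pairwise distances into pairs from the same cluster and pairs from different clusters. The names same_cluster, intra and inter are hypothetical.

```python
import numpy as np
import sklearn.datasets as sk_data
import sklearn.metrics as metrics

# Regenerate the same synthetic data as in the notes.
X, y = sk_data.make_blobs(n_samples=100, centers=3, n_features=30,
                          center_box=(-10.0, 10.0), random_state=0)
D = metrics.euclidean_distances(X)

# Indices of the strictly lower triangle, so each pair is counted once.
i, j = np.tril_indices_from(D, k=-1)

# True where both points of a pair carry the same ground-truth label.
same_cluster = y[i] == y[j]

intra = D[i, j][same_cluster]     # distances within a cluster
inter = D[i, j][~same_cluster]    # distances between clusters

print("number of intra-cluster pairs:", intra.size)
print("number of inter-cluster pairs:", inter.size)
print("largest intra-cluster distance:", intra.max())
print("smallest inter-cluster distance:", inter.min())
```

When the largest intra-cluster distance is below the smallest inter-cluster distance, the two modes of the histogram do not overlap at all.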

How would the curse of dimensionality affect this? We discuss the curse of dimensionality later in the course.

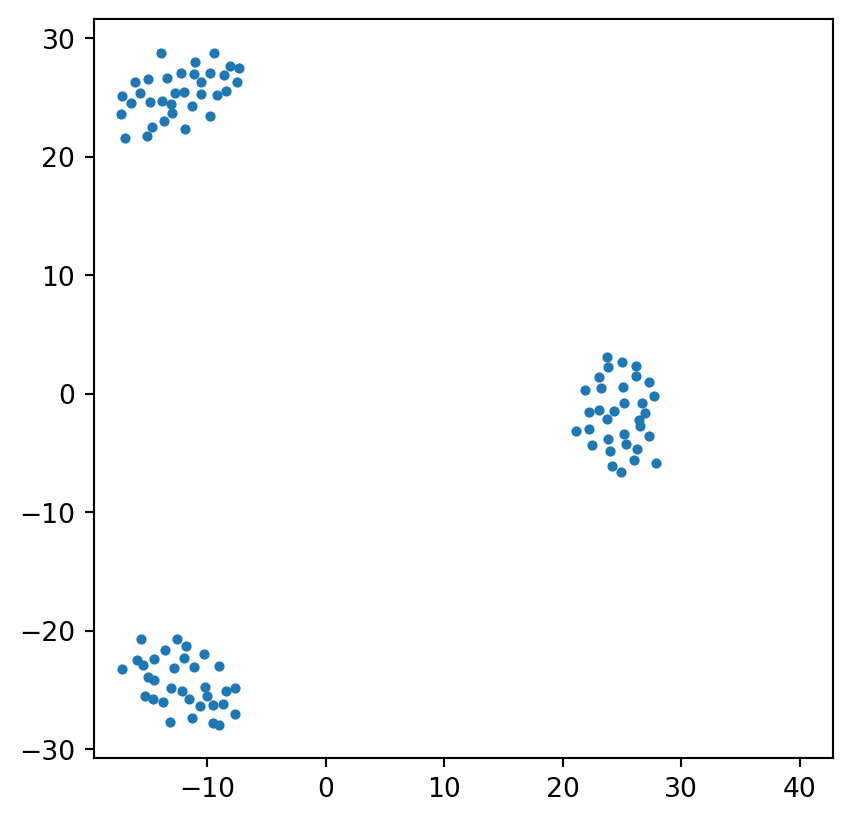

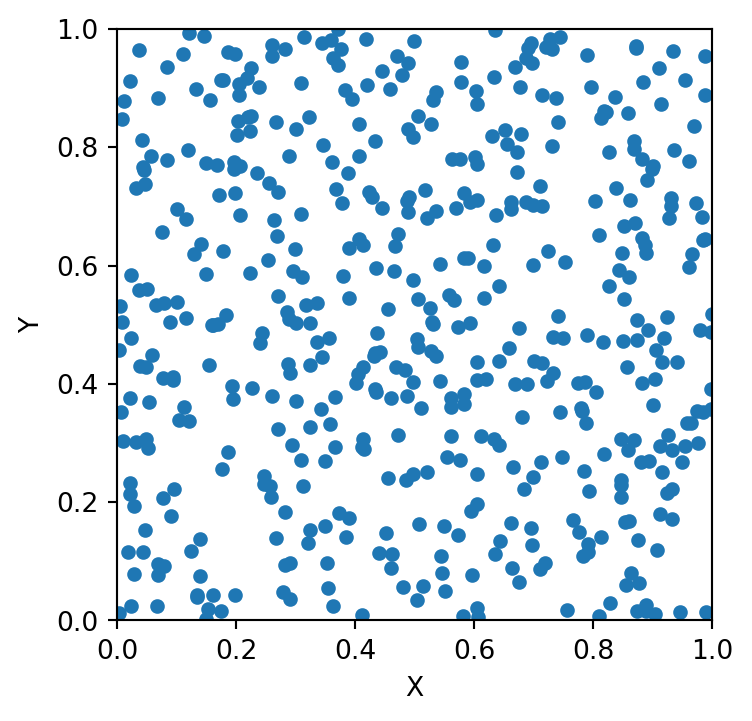

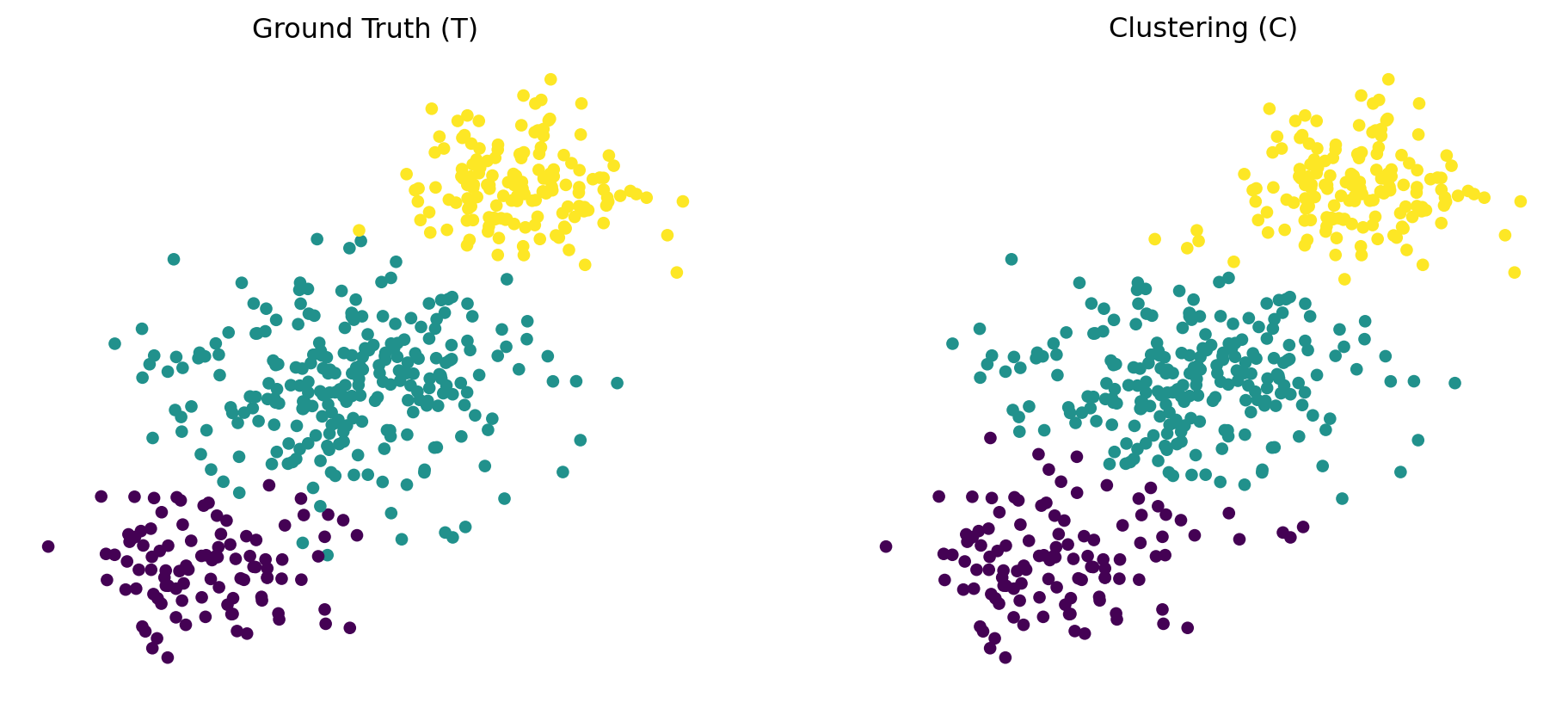

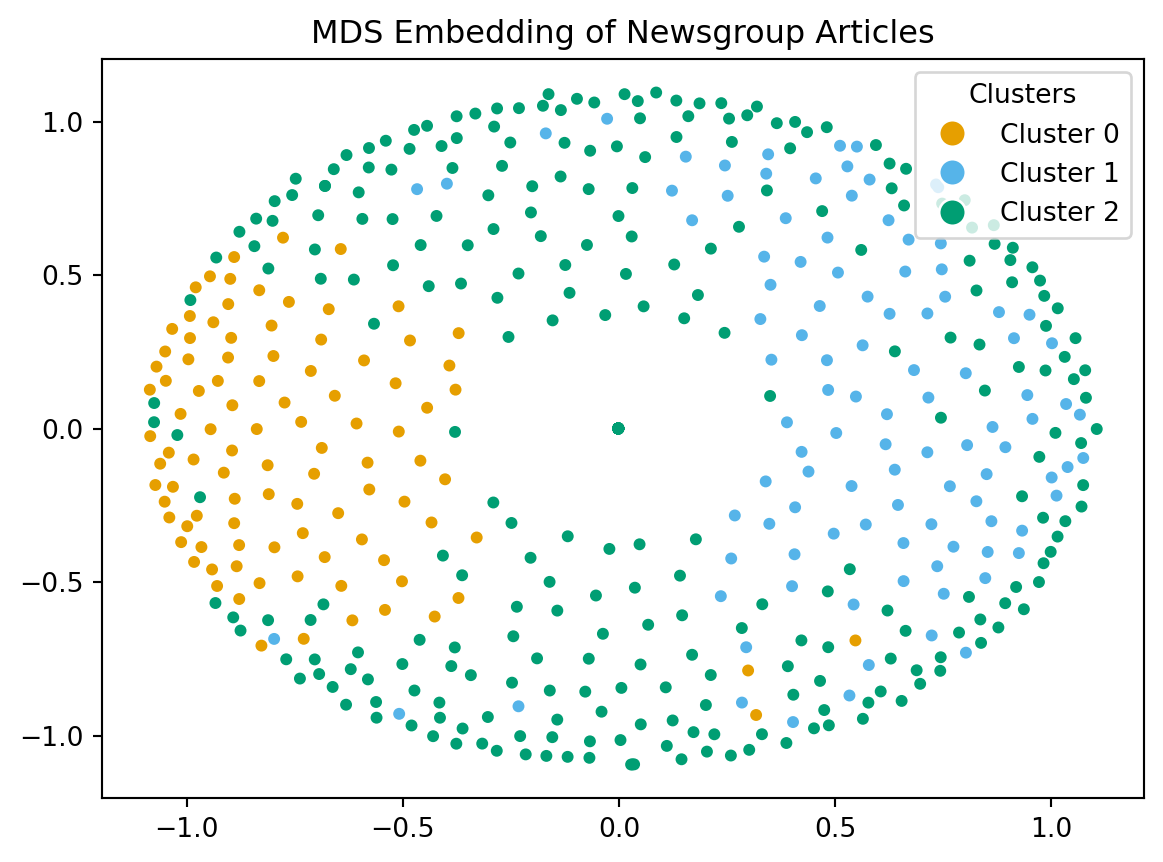

Visualizing with MDS

The idea behind Multidimensional Scaling (MDS) is as follows. Given a pairwise distance (or dissimilarity) matrix:

- Find a set of coordinates in 1, 2 or 3-D space that approximates those distances as well as possible.

- The points that are close (or far) in high dimensional space should be close (or far) in the reduced dimension space.
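One common way to make "approximates those distances as well as possible" precise is a least-squares objective, often called the raw stress. The notation here is an assumption, not taken from the notes: \(d_{ij}\) is the given dissimilarity between points \(i\) and \(j\), and \(x_1, \dots, x_n\) are the low-dimensional coordinates we are solving for.

```latex
\[
\text{Stress}(x_1, \dots, x_n) \;=\; \sum_{i < j} \bigl( d_{ij} - \lVert x_i - x_j \rVert_2 \bigr)^2
\]
```

A stress of zero means the low-dimensional points reproduce every given distance exactly, and larger values mean a worse fit.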

MDS Continued

Note that there are two forms of MDS:

- Metric MDS, of which Classical MDS is a special case. Classical MDS has a closed-form solution based on the eigenvectors of the double-centered matrix of squared distances.

  - \(O(n^3)\) time complexity and \(O(n^2)\) space complexity.

- Non-Metric MDS, which tries to find a non-parametric monotonic relationship between the dissimilarities and the distances in the target coordinates through an iterative approach.

  - \(O(n^2)\) time complexity.
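The closed-form solution for Classical MDS can be sketched in a few lines of NumPy. This is a minimal illustration under the assumption that the input holds Euclidean distances. It is not the routine scikit-learn uses, and the function name classical_mds and the variable names are hypothetical.

```python
import numpy as np

def classical_mds(D, n_components=2):
    """Classical (Torgerson) MDS from a matrix of pairwise Euclidean distances."""
    n = D.shape[0]
    # Centering matrix J = I - (1/n) * 1 1^T.
    J = np.eye(n) - np.ones((n, n)) / n
    # Double-centered matrix of squared distances.
    B = -0.5 * J @ (D ** 2) @ J
    # Eigendecomposition of the symmetric matrix B (eigenvalues ascending).
    eigvals, eigvecs = np.linalg.eigh(B)
    # Keep the n_components largest eigenvalues and their eigenvectors.
    top = np.argsort(eigvals)[::-1][:n_components]
    # Clip tiny negative eigenvalues caused by rounding before the square root.
    lam = np.clip(eigvals[top], 0.0, None)
    return eigvecs[:, top] * np.sqrt(lam)

# Sanity check on points that really are 2-D: distances should be recovered.
rng = np.random.default_rng(0)
P = rng.normal(size=(20, 2))
D = np.linalg.norm(P[:, None, :] - P[None, :, :], axis=2)

Y = classical_mds(D, n_components=2)
D_hat = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=2)

print("distances recovered:", np.allclose(D, D_hat))
```

The eigendecomposition of the \(n \times n\) matrix is where the \(O(n^3)\) time comes from, and storing that matrix accounts for the \(O(n^2)\) space. The recovered coordinates match the originals only up to rotation, reflection and translation, which is why the check compares distances rather than coordinates.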

Question: If non-metric is faster, why not always use it?

Answer: Metric MDS preserves the relative distances between points more faithfully, whereas non-metric MDS preserves their rank order but not necessarily the distances themselves.

In general, MDS may not work well if, for example, the dissimilarities are not well modeled by a metric such as Euclidean distance.
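To see what "preserves the order but not the distances" means, consider a small illustration with made-up dissimilarities. It applies a monotone distortion (a square root, chosen arbitrarily) and checks that the ranking is unchanged even though the values are not. All names and numbers here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Ten made-up dissimilarities between 1 and 10.
d = rng.uniform(1.0, 10.0, size=10)

# A monotone but nonlinear distortion of the dissimilarities.
d_warped = np.sqrt(d)

# Rank of each entry (0 = smallest), computed with a double argsort.
ranks = np.argsort(np.argsort(d, kind="stable"), kind="stable")
ranks_warped = np.argsort(np.argsort(d_warped, kind="stable"), kind="stable")

print("same rank order:", np.array_equal(ranks, ranks_warped))
print("same values:", np.allclose(d, d_warped))
```

Non-metric MDS treats two such sets of dissimilarities as equivalent targets, whereas metric MDS would try to match the actual values.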

MDS Visualization Result


import sklearn.manifold

import matplotlib.pyplot as plt

mds = sklearn.manifold.MDS(n_components=2, max_iter=3000, eps=1e-9, random_state=0,
                           dissimilarity="precomputed", n_jobs=1)

fit = mds.fit(euclidean_dists)

pos = fit.embedding_

plt.scatter(pos[:, 0], pos[:, 1], s=8)

plt.axis('square');/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: overflow encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: invalid value encountered in matmul

ret = a @ b

/Users/tgardos/Source/courses/ds701/DS701-Course-Notes/.venv/lib/python3.10/site-packages/sklearn/utils/extmath.py:203: RuntimeWarning: divide by zero encountered in matmul

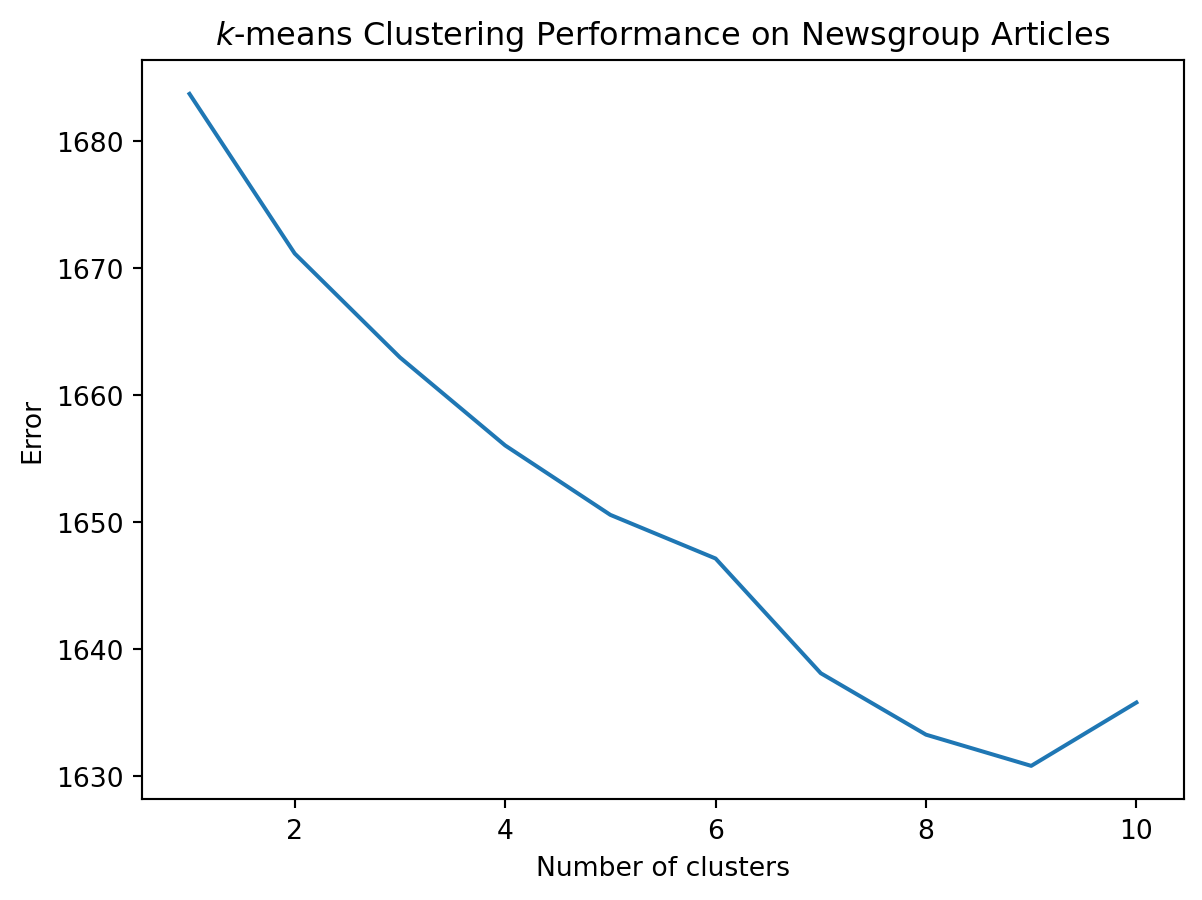

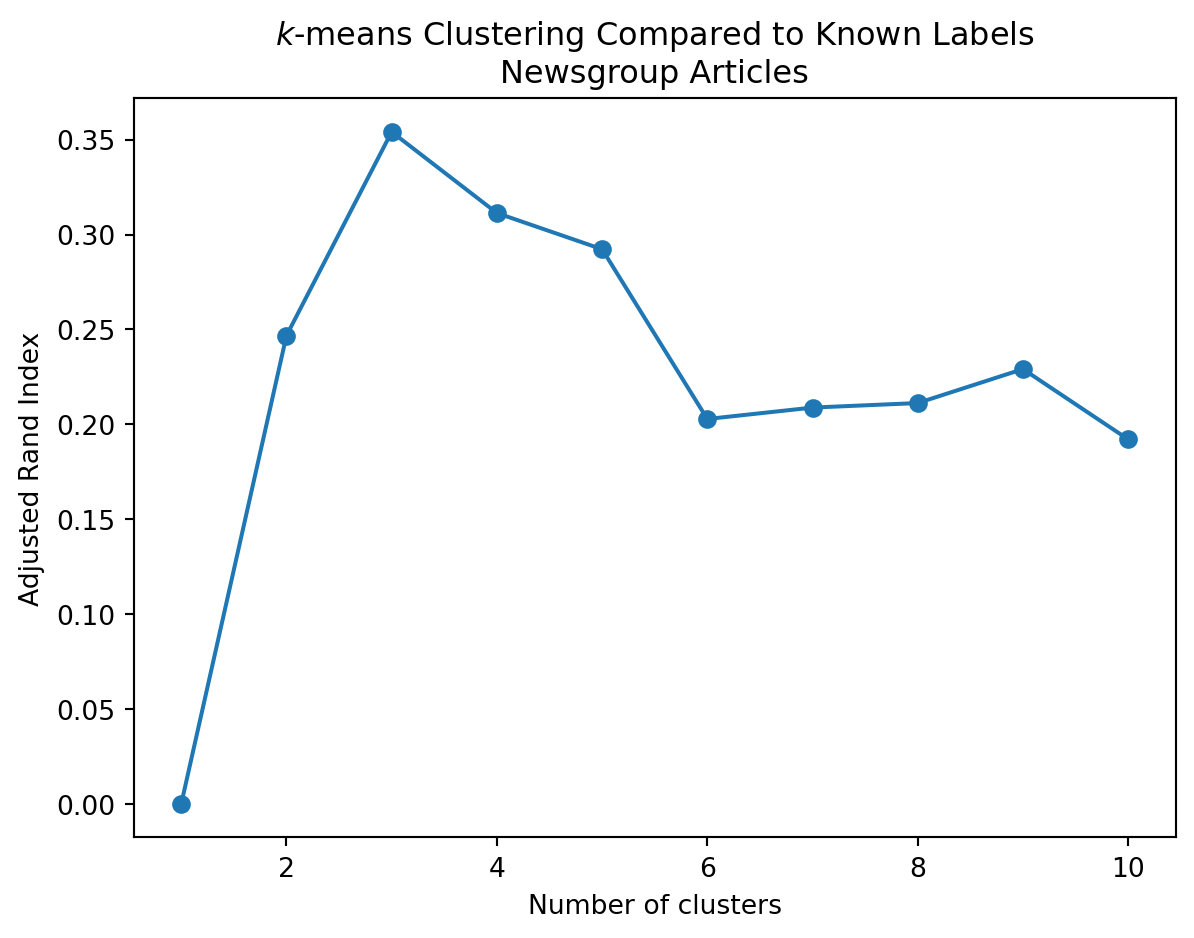

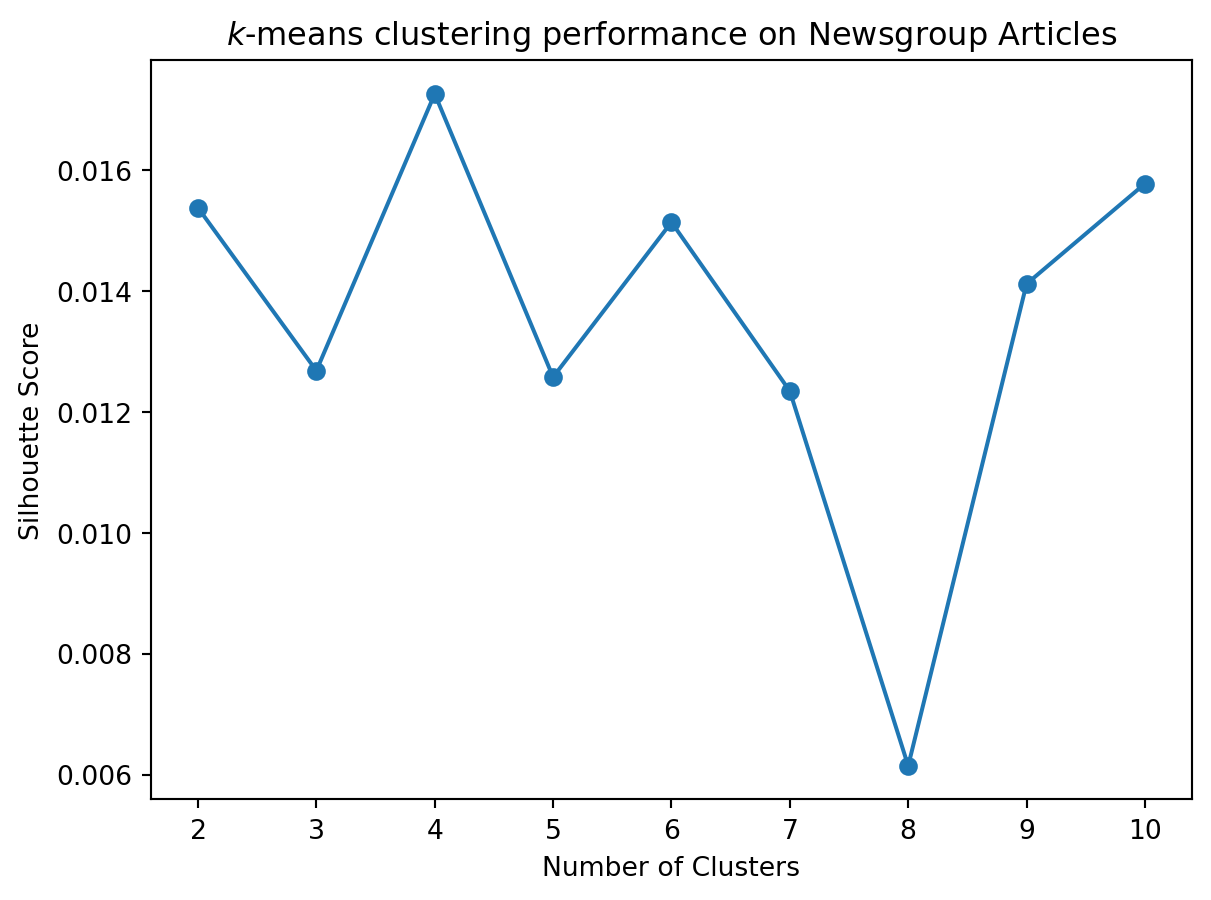

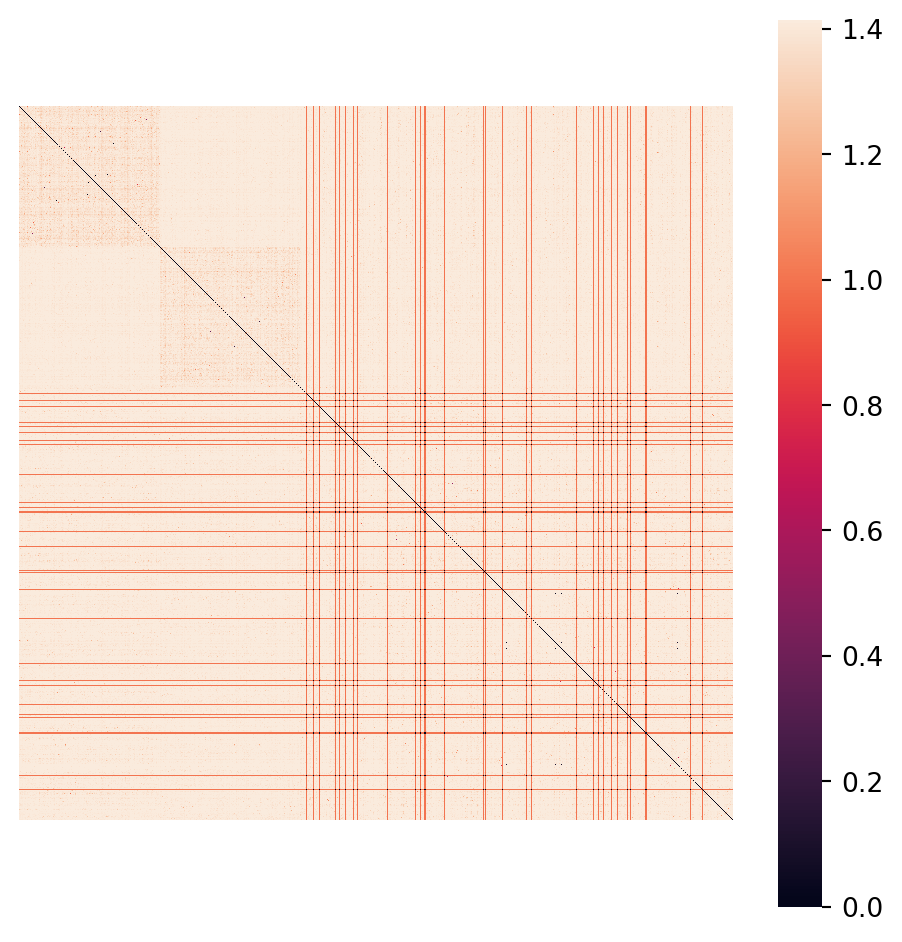
